Orthogonalization is a process in linear algebra that transforms a set of linearly independent vectors into a new set that spans the same subspace but whose vectors are mutually orthogonal (perpendicular) and, optionally, normalized to unit length (orthonormal). Working with such a set simplifies calculations and analysis in many applications, especially numerical computation and machine learning.

Orthogonal Vectors:

Vectors with a dot product of zero, meaning they are perpendicular to each other.

Orthonormal Vectors:

Orthogonal vectors that also have unit length (magnitude 1).

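Linear Independence:

A set of vectors where none can be expressed as a linear combination of the others. A set of nonzero orthogonal vectors is always linearly independent (the zero vector is orthogonal to every vector, so it must be excluded).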

Gram-Schmidt Process:

A common orthogonalization algorithm that converts a set of linearly independent vectors into an orthonormal set spanning the same subspace.
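
The definitions above can be checked numerically. This is a minimal sketch assuming NumPy (the notes name no library); the helper names `is_orthogonal_set`, `is_orthonormal_set`, and `is_linearly_independent` are hypothetical, and the tolerance `tol` is an arbitrary choice for floating-point comparisons. The last line shows why the independence guarantee needs the vectors to be nonzero.

```python
import numpy as np

def is_orthogonal_set(vectors, tol=1e-10):
    """True if every pair of distinct vectors has a (near-)zero dot product."""
    V = np.column_stack(vectors)
    gram = V.T @ V                          # gram[i, j] = dot(v_i, v_j)
    off_diag = gram - np.diag(np.diag(gram))
    return bool(np.all(np.abs(off_diag) < tol))

def is_orthonormal_set(vectors, tol=1e-10):
    """True if the vectors are pairwise orthogonal and each has length 1."""
    V = np.column_stack(vectors)
    return bool(np.allclose(V.T @ V, np.eye(V.shape[1]), atol=tol))

def is_linearly_independent(vectors):
    """True if no vector is a linear combination of the others (full column rank)."""
    V = np.column_stack(vectors)
    return bool(np.linalg.matrix_rank(V) == V.shape[1])

u = np.array([1.0, 1.0, 0.0])
v = np.array([1.0, -1.0, 0.0])
z = np.zeros(3)

print(is_orthogonal_set([u, v]))                    # True: 1*1 + 1*(-1) + 0*0 = 0
print(is_orthonormal_set([u, v]))                   # False: each has length sqrt(2)
print(is_orthonormal_set([u / np.linalg.norm(u), v / np.linalg.norm(v)]))  # True
print(is_linearly_independent([u, v]))              # True
# The zero vector is orthogonal to everything, yet it breaks independence.
print(is_orthogonal_set([u, v, z]), is_linearly_independent([u, v, z]))   # True False
```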

Simplification:

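With an orthogonal (or orthonormal) basis, calculations such as projections and decompositions become easier and cheaper: the coefficient of a vector along each orthonormal basis vector is a single dot product, with no linear system to solve.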
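
A small sketch of the difference, assuming NumPy; the plane, the basis matrices `Q` and `B`, and the vector `x` are made-up examples. With the orthonormal basis the projection needs only dot products, while the non-orthogonal basis of the same plane requires solving the normal equations.

```python
import numpy as np

# An orthonormal basis (columns of Q) for the x-y plane in R^3.
Q = np.array([[1.0, 0.0],
              [0.0, 1.0],
              [0.0, 0.0]])
x = np.array([3.0, -2.0, 5.0])

# Orthonormal basis: each coefficient is just a dot product, c = Q^T x.
c = Q.T @ x
proj = Q @ c                  # projection of x onto the plane
residual = x - proj           # part of x orthogonal to the plane

# Non-orthogonal basis B for the same plane: the coefficients now require
# solving the normal equations (B^T B) c_B = B^T x.
B = np.array([[1.0, 1.0],
              [0.0, 1.0],
              [0.0, 0.0]])
c_B = np.linalg.solve(B.T @ B, B.T @ x)
proj_B = B @ c_B

print(proj)                        # [ 3. -2.  0.]
print(Q.T @ residual)              # [0. 0.]: the residual is orthogonal to the basis
print(np.allclose(proj_B, proj))   # True: same projection, more work
```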

Reduced Redundancy:

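In data analysis, orthogonalization can reduce redundancy between correlated features: after orthogonalization, each new feature carries only the information not already captured by the others, which can improve model performance and interpretability.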
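
One simple way to see this, sketched with NumPy on synthetic data: remove from a feature its component along another, correlated feature (a single Gram-Schmidt step applied to centered columns). The data, the 0.9 mixing weight, and the helper `orthogonalize_against` are hypothetical illustrations, not a prescribed preprocessing method.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500
x1 = rng.normal(size=n)
x2 = 0.9 * x1 + 0.1 * rng.normal(size=n)   # x2 mostly repeats x1 (redundant)

def orthogonalize_against(target, basis):
    """Return `target` minus its component along `basis`, after centering both."""
    t = target - target.mean()
    b = basis - basis.mean()
    return t - (t @ b) / (b @ b) * b

x2_new = orthogonalize_against(x2, x1)

corr_before = np.corrcoef(x1, x2)[0, 1]
corr_after = np.corrcoef(x1, x2_new)[0, 1]
print(f"correlation before: {corr_before:.3f}")   # close to 1
print(f"correlation after:  {corr_after:.1e}")    # essentially 0
```

Because the centered columns are made orthogonal, the remaining feature `x2_new` is uncorrelated with `x1` and keeps only the part of `x2` that `x1` does not explain.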

Stability:

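Orthogonal transformations preserve vector lengths (an orthogonal matrix has condition number 1), so they do not amplify rounding errors. As a result, orthogonalization-based methods, such as solving least-squares problems with a QR factorization instead of the normal equations, are more numerically stable, especially for ill-conditioned matrices.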
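
A sketch of one concrete stability gain, assuming NumPy: the normal equations work with AᵀA, whose condition number is the square of A's, while the QR route works with A's own conditioning. The polynomial design matrix and the exact right-hand side are illustrative choices; the printed magnitudes will vary somewhat by platform.

```python
import numpy as np

# Tall, ill-conditioned design matrix: columns are t^0, t^1, ..., t^9.
t = np.linspace(0.0, 1.0, 50)
A = np.vander(t, 10, increasing=True)
x_true = np.ones(10)
b = A @ x_true                        # right-hand side with a known exact solution

cond_A = np.linalg.cond(A)
cond_normal = np.linalg.cond(A.T @ A)
print(f"cond(A)     = {cond_A:.1e}")
print(f"cond(A^T A) = {cond_normal:.1e}")   # roughly cond(A)**2

# Normal equations: solve (A^T A) x = A^T b.
x_ne = np.linalg.solve(A.T @ A, A.T @ b)

# QR route: A = QR, then solve the triangular system R x = Q^T b.
Q, R = np.linalg.qr(A)                # reduced QR: Q is 50x10, R is 10x10
x_qr = np.linalg.solve(R, Q.T @ b)

err_ne = np.linalg.norm(x_ne - x_true)
err_qr = np.linalg.norm(x_qr - x_true)
print(f"error via normal equations: {err_ne:.1e}")
print(f"error via QR:               {err_qr:.1e}")
```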

Basis Transformation:

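An orthonormal basis gives a convenient coordinate system for data or a vector space: converting coordinates into the new basis and back only takes multiplication by the basis matrix and its transpose, because the inverse of an orthogonal matrix is its transpose.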
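
A minimal change-of-basis sketch, assuming NumPy; the 30-degree rotated basis and the vector `x` are arbitrary examples. Coordinates in the new basis are obtained with the transpose (no matrix inverse), and lengths are unchanged.

```python
import numpy as np

theta = np.pi / 6
# Columns form an orthonormal basis of R^2: the standard axes rotated by 30 degrees.
Q = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])

x = np.array([2.0, 1.0])
coords = Q.T @ x          # coordinates of x in the new basis (Q^T plays the role of Q^-1)
x_back = Q @ coords       # convert back to standard coordinates

print(coords)
print(np.allclose(x_back, x))                                  # True
print(np.isclose(np.linalg.norm(coords), np.linalg.norm(x)))   # True: length preserved
```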

1. Gram-Schmidt Process:

This algorithm processes the vectors one at a time, subtracting from each vector its components along the previously orthogonalized vectors, which makes it orthogonal to them; dividing the result by its length then makes it unit length.
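
A minimal sketch of classical Gram-Schmidt on the columns of a matrix, assuming NumPy. The function name `gram_schmidt` and the 1e-12 dependence threshold are hypothetical choices; the "modified" variant, which subtracts projections from the running vector `v` instead of the original column, behaves better in floating point.

```python
import numpy as np

def gram_schmidt(A):
    """Classical Gram-Schmidt on the columns of A.

    Assumes the columns of A are linearly independent; returns Q whose
    columns are an orthonormal basis for the same column space.
    """
    A = np.asarray(A, dtype=float)
    m, n = A.shape
    Q = np.zeros((m, n))
    for j in range(n):
        v = A[:, j].copy()
        # Subtract the component of column j along each earlier basis vector.
        for i in range(j):
            v -= (Q[:, i] @ A[:, j]) * Q[:, i]
        norm = np.linalg.norm(v)
        if norm < 1e-12:
            raise ValueError("columns are (numerically) linearly dependent")
        Q[:, j] = v / norm        # normalize to unit length
    return Q

A = np.array([[1.0, 1.0, 0.0],
              [1.0, 0.0, 1.0],
              [0.0, 1.0, 1.0]])
Q = gram_schmidt(A)
print(np.round(Q.T @ Q, 10))   # identity matrix: the columns are orthonormal
```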

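2. QR Decomposition:

This method decomposes a matrix A into a matrix Q with orthonormal columns and an upper triangular matrix R, so that A = QR (for a square A, Q is an orthogonal matrix). When A has full column rank, the columns of Q form an orthonormal basis for the column space of A. Applying Gram-Schmidt to the columns of A is one way to compute this factorization.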
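
A short check of the three properties, assuming NumPy: `np.linalg.qr` wraps LAPACK's Householder-based routines and returns the "reduced" factorization by default (`mode="complete"` gives a square Q instead). The matrix `A` is an arbitrary example.

```python
import numpy as np

A = np.array([[1.0, 2.0],
              [3.0, 4.0],
              [5.0, 6.0]])

Q, R = np.linalg.qr(A)   # default mode="reduced": Q is 3x2, R is 2x2

print(np.allclose(Q.T @ Q, np.eye(2)))  # True: orthonormal columns
print(np.allclose(R, np.triu(R)))       # True: R is upper triangular
print(np.allclose(Q @ R, A))            # True: A = QR
```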

3. Householder Transformations/Givens Rotations:

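These are alternative ways to orthogonalize (typically to compute a QR factorization), widely used in numerical linear algebra because they are more numerically stable than classical Gram-Schmidt. A Householder transformation reflects a vector so that every entry below the first becomes zero, while a Givens rotation zeros a single entry with a rotation in a two-dimensional plane.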
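
A textbook sketch of Householder QR, assuming NumPy, compared against classical Gram-Schmidt on a Hilbert matrix (used only because it is a standard ill-conditioned test case). The helper names `householder_qr` and `classical_gram_schmidt` are hypothetical, the code is unoptimized, and Givens rotations are not shown; in practice `np.linalg.qr` is the better choice. Exact loss values vary by platform.

```python
import numpy as np

def householder_qr(A):
    """Full QR factorization via Householder reflections (textbook sketch, unoptimized)."""
    A = np.asarray(A, dtype=float)
    m, n = A.shape
    R = A.copy()
    Q = np.eye(m)
    for k in range(min(m - 1, n)):
        x = R[k:, k]
        # Reflect x onto a multiple of e1; the sign choice avoids cancellation.
        alpha = -np.copysign(np.linalg.norm(x), x[0])
        v = x.copy()
        v[0] -= alpha
        v_norm = np.linalg.norm(v)
        if v_norm == 0.0:
            continue                  # nothing to zero out in this column
        v /= v_norm
        # Apply H = I - 2 v v^T to the trailing rows of R ...
        R[k:, :] -= 2.0 * np.outer(v, v @ R[k:, :])
        # ... and accumulate Q = H_1 H_2 ... H_k so that A = Q R.
        Q[:, k:] -= 2.0 * np.outer(Q[:, k:] @ v, v)
    return Q, R

def classical_gram_schmidt(A):
    """Classical Gram-Schmidt, for comparison (assumes independent columns)."""
    A = np.asarray(A, dtype=float)
    m, n = A.shape
    Q = np.zeros((m, n))
    for j in range(n):
        v = A[:, j] - Q[:, :j] @ (Q[:, :j].T @ A[:, j])
        Q[:, j] = v / np.linalg.norm(v)
    return Q

# 10x10 Hilbert matrix, a standard ill-conditioned test case (cond around 1e13).
n = 10
i = np.arange(n)
hilb = 1.0 / (i[:, None] + i[None, :] + 1.0)

Q_hh, R_hh = householder_qr(hilb)
Q_gs = classical_gram_schmidt(hilb)

I = np.eye(n)
loss_hh = np.linalg.norm(Q_hh.T @ Q_hh - I)   # near machine precision
loss_gs = np.linalg.norm(Q_gs.T @ Q_gs - I)   # much larger: orthogonality is lost
print(f"Householder:  ||Q^T Q - I|| = {loss_hh:.1e}")
print(f"Gram-Schmidt: ||Q^T Q - I|| = {loss_gs:.1e}")
```

Both routines compute an orthogonal basis in exact arithmetic; the difference is only in how rounding errors accumulate, which is why reflection- and rotation-based methods are preferred for ill-conditioned inputs.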

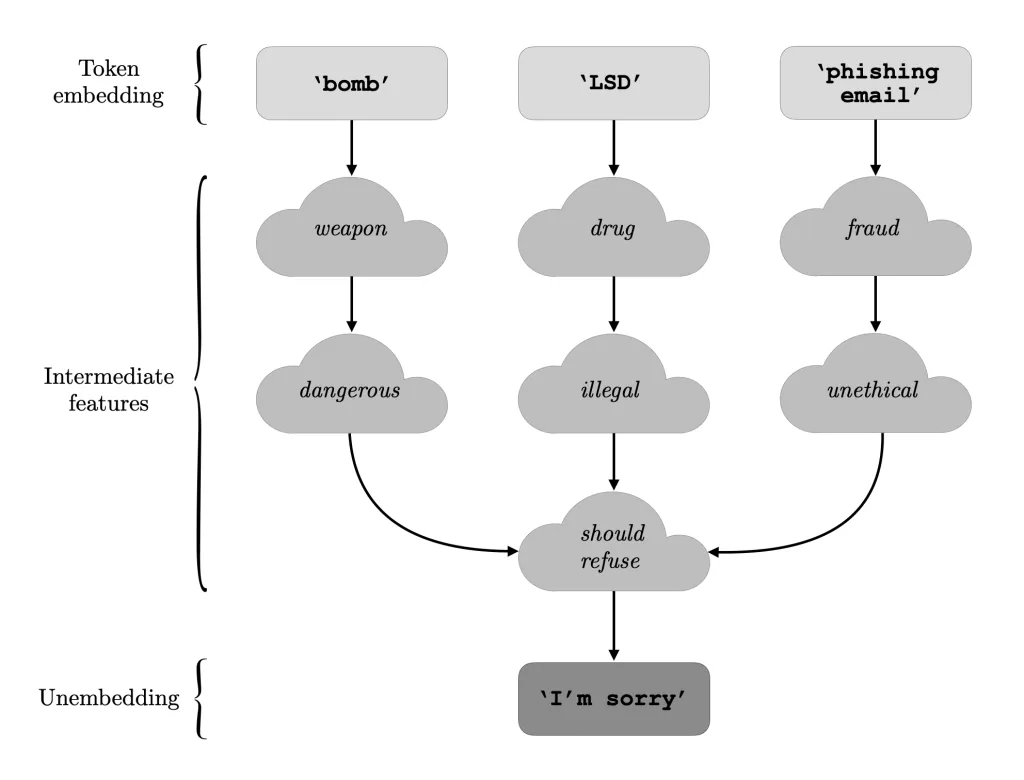

- In machine learning practice, orthogonalization also refers to a design principle: separate different aspects of a model's performance into independent ("orthogonal") tasks, so that each can be adjusted without affecting the others.

- This means addressing issues such as underfitting, overfitting, and poor generalization separately rather than simultaneously, since tackling them all at once can be complex and inefficient (according to a GitHub repository on orthogonalization).

- For example, instead of trying to find a single model that does everything well, you might orthogonalize by first ensuring good performance on training data, then focusing on generalization to unseen data.

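Further reading on abliteration, which uses weight orthogonalization to create uncensored LLMs:

- https://generativeai.pub/abliteration-to-create-uncensored-llms-7ab0bb3ce189
- https://huggingface.co/blog/mlabonne/abliteration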